Current methods of eye movement tracking have limited control and precision

Existing eye-tracking methods include skin-mounted sensors and camera-based image analysis. Skin-mounted systems such as electrooculography (EOG) show promise for helping people with disabilities with everyday activities, but they are susceptible to data loss when user movements disturb sensor contact with the skin and when ambient noise corrupts the signal. This limits precise detection of eye angle and gaze, hampering the use of eye motions for persistent human-machine interfaces (HMIs). As a result, these systems can only perform simple actions, such as unidirectional motions of drones and wheelchairs.

To overcome these limitations, machine learning has been applied to video monitoring with commercially available, image-based eye trackers. However, these eye trackers have limited functionality: they can only track gaze, and their performance can be degraded by lighting conditions. Adding control functions through software is expensive and requires complicated eye movements that can cause severe eye fatigue.

Two-camera eye-tracking system with embedded machine-learning algorithm provides accurate eye movement detection

This two-camera eye-tracking system (TCES) integrates a commercial eye tracker with machine-learning technology for continuous real-time classification of gaze and eye directions to precisely control a robotic arm and enable persistent HMI.

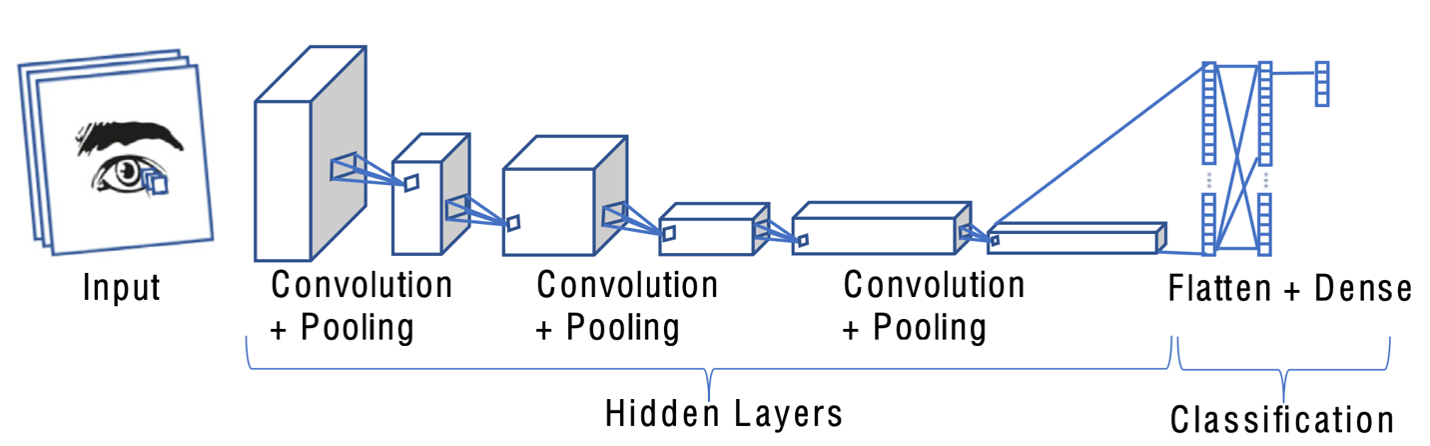

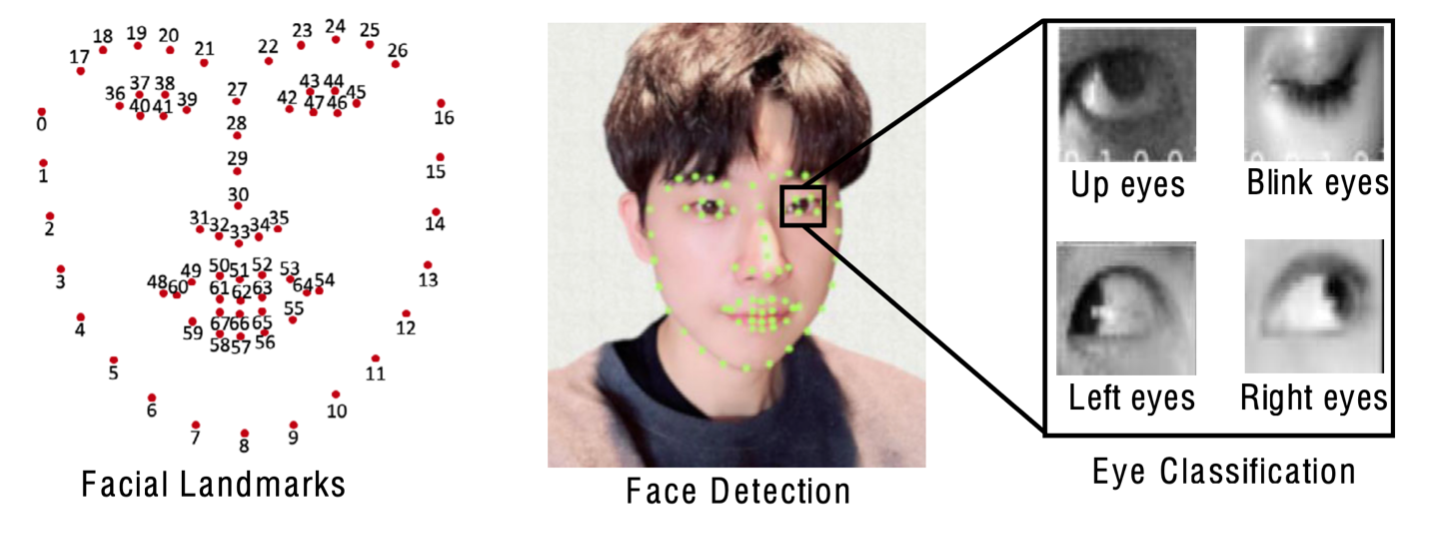

The machine-learning component is a convolutional neural network (CNN), a deep-learning model that detects eye directions from two webcams and classifies each eye movement. The algorithm is trained on hundreds of eye images to recognize four classes: up, blink, left, and right. Gaze is tracked by a commercial eye tracker using the pupil center-corneal reflection (PCCR) method, and this gaze signal acts as a trigger for the all-in-one interface.
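As a rough illustration of the classification step described above, the sketch below runs a forward pass of a deliberately tiny CNN over a grayscale eye crop and returns one of the four classes. The architecture (one convolution layer with eight 3×3 filters, ReLU, 2×2 max pooling, and a dense softmax layer), the 32×32 input size, the class order, and the names `TinyEyeCNN`, `conv2d`, and `max_pool` are all hypothetical; the source does not specify the network, and the weights here are random, so predictions are meaningless until the model is trained on labeled eye images.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

# Hypothetical class order; the source names these four classes but not their encoding.
CLASSES = ("up", "blink", "left", "right")


def conv2d(x: np.ndarray, kernels: np.ndarray) -> np.ndarray:
    """Valid 2-D cross-correlation of a single-channel image x (H, W) with a
    bank of kernels (K, kh, kw). Returns feature maps of shape (K, H-kh+1, W-kw+1)."""
    kh, kw = kernels.shape[1:]
    windows = sliding_window_view(x, (kh, kw))  # (H-kh+1, W-kw+1, kh, kw)
    return np.einsum("ijab,kab->kij", windows, kernels)


def max_pool(x: np.ndarray, size: int = 2) -> np.ndarray:
    """Non-overlapping max pooling on (K, H, W) feature maps; leftover rows/cols are dropped."""
    k, h, w = x.shape
    h2, w2 = h // size, w // size
    x = x[:, : h2 * size, : w2 * size]
    return x.reshape(k, h2, size, w2, size).max(axis=(2, 4))


def softmax(z: np.ndarray) -> np.ndarray:
    e = np.exp(z - z.max())  # subtract the max for numerical stability
    return e / e.sum()


class TinyEyeCNN:
    """Hypothetical conv -> ReLU -> max-pool -> dense -> softmax classifier (untrained)."""

    def __init__(self, img_size: int = 32, n_kernels: int = 8, kernel: int = 3, seed: int = 0):
        rng = np.random.default_rng(seed)
        self.kernels = rng.normal(0.0, 0.1, (n_kernels, kernel, kernel))
        pooled = (img_size - kernel + 1) // 2  # spatial size after conv and 2x2 pooling
        self.w = rng.normal(0.0, 0.1, (len(CLASSES), n_kernels * pooled * pooled))
        self.b = np.zeros(len(CLASSES))

    def predict_proba(self, eye_img: np.ndarray) -> np.ndarray:
        feats = np.maximum(conv2d(eye_img, self.kernels), 0.0)  # ReLU
        feats = max_pool(feats).ravel()
        return softmax(self.w @ feats + self.b)

    def predict(self, eye_img: np.ndarray) -> str:
        return CLASSES[int(np.argmax(self.predict_proba(eye_img)))]


if __name__ == "__main__":
    model = TinyEyeCNN()
    crop = np.random.default_rng(1).random((32, 32))  # stand-in for a normalized grayscale eye crop
    print(model.predict(crop), model.predict_proba(crop).round(3))
```

A real system would add more layers, train the weights by backpropagation on the labeled eye images, and run the classifier on each incoming webcam frame.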

Control software lets the user's eyes work like a computer mouse: the user selects a target by looking at one of multiple on-screen grids, then issues an eye command to confirm intent. With its all-in-one interface, TCES supports eye control of 32 grids with two actions (grip and release) per grid, for 64 grid-action combinations. This allows the user to issue numerous commands (e.g., to a robotic arm) within an operating range without the risk of losing control.
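The arithmetic behind the grid interface can be sketched as follows: a gaze point selects one of 32 grids, and a classified eye command selects one of the two actions, giving 32 × 2 = 64 distinct action IDs. The 4 × 8 grid layout, the 1920 × 1080 screen size, the assignment of `left` to grip and `right` to release, and the helper names `gaze_to_grid`, `to_action_id`, and `command` are hypothetical assumptions for illustration, not the TCES implementation.

```python
from typing import Optional, Tuple

# Hypothetical layout: the source gives 32 grids but not their arrangement.
ROWS, COLS = 4, 8
SCREEN_W, SCREEN_H = 1920, 1080  # hypothetical screen size in pixels
ACTIONS = ("grip", "release")  # the two per-grid actions named in the source
# Hypothetical mapping from CNN eye-command classes to actions.
EYE_COMMAND_TO_ACTION = {"left": "grip", "right": "release"}


def gaze_to_grid(x: float, y: float) -> Optional[int]:
    """Return the grid index (0..ROWS*COLS-1) under gaze point (x, y), or None if off-screen."""
    if not (0 <= x < SCREEN_W and 0 <= y < SCREEN_H):
        return None
    col = int(x * COLS / SCREEN_W)
    row = int(y * ROWS / SCREEN_H)
    return row * COLS + col


def to_action_id(grid: int, eye_command: str) -> Optional[int]:
    """Combine a grid index and an eye command into a unique action ID (0..63), or None if unmapped."""
    action = EYE_COMMAND_TO_ACTION.get(eye_command)
    if action is None:
        return None
    return grid * len(ACTIONS) + ACTIONS.index(action)


def command(x: float, y: float, eye_command: str) -> Optional[Tuple[int, str, int]]:
    """Gaze selects the grid; the eye command confirms which action to send."""
    grid = gaze_to_grid(x, y)
    if grid is None:
        return None
    action_id = to_action_id(grid, eye_command)
    if action_id is None:
        return None
    return grid, ACTIONS[action_id % len(ACTIONS)], action_id


if __name__ == "__main__":
    print(command(1000, 600, "right"))  # -> (20, 'release', 41)
    print(ROWS * COLS * len(ACTIONS))   # -> 64
```

Returning `None` for off-screen gaze or an unmapped eye command is one simple way to ignore input that should not move the arm; the source does not describe how TCES handles such cases.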

Because it relies on simple eye movements, TCES offers low-cost, high-precision HMI control, with potential applications in assisting people with disabilities, remote control of surgical robots, warehouse systems, construction tools, and more.

Benefits and features

- Exceptional accuracy: Combining the webcam and the PCCR-based commercial eye tracker with the deep-learning CNN enables highly accurate classification of four eye-movement classes (up, blink, left, and right), with 99.99% accuracy achieved in studies.

- Highest performance: Unlike existing systems, which support only 6–9 limited actions, this technology has demonstrated more than 64 distinct actions, significantly more than other systems.

- Cost effective: Integrating machine-learning technology with a commercial eye-tracking system and inexpensive webcams lowers costs.

- Improved efficiency: The all-in-one interface offers hands-free control of a robotic arm with many degrees of freedom, without requiring any other input, such as hand actions.

- Versatile: The system’s supervisory control and data acquisition architecture can be universally applied to any screen-based HMI task.

Potential commercial applications

- Assistance for people with disabilities that prevent hand movement: eye movements can be used to perform daily activities, call for help, control a hospital bed, and more.

- Robot-assisted surgery: providing extra maneuvering tools when both of the surgeon's hands are occupied and enabling solo surgery when no assistant is available. The system could also support rapid, efficient robot-assisted upper-airway endoscopy.

- Construction sites and warehouses, where workers may be exposed to hazards: more efficient equipment control, for example automating repetitive tasks and operating heavy equipment to move heavy boxes, unload trucks, build pallets of boxes, and pull orders.

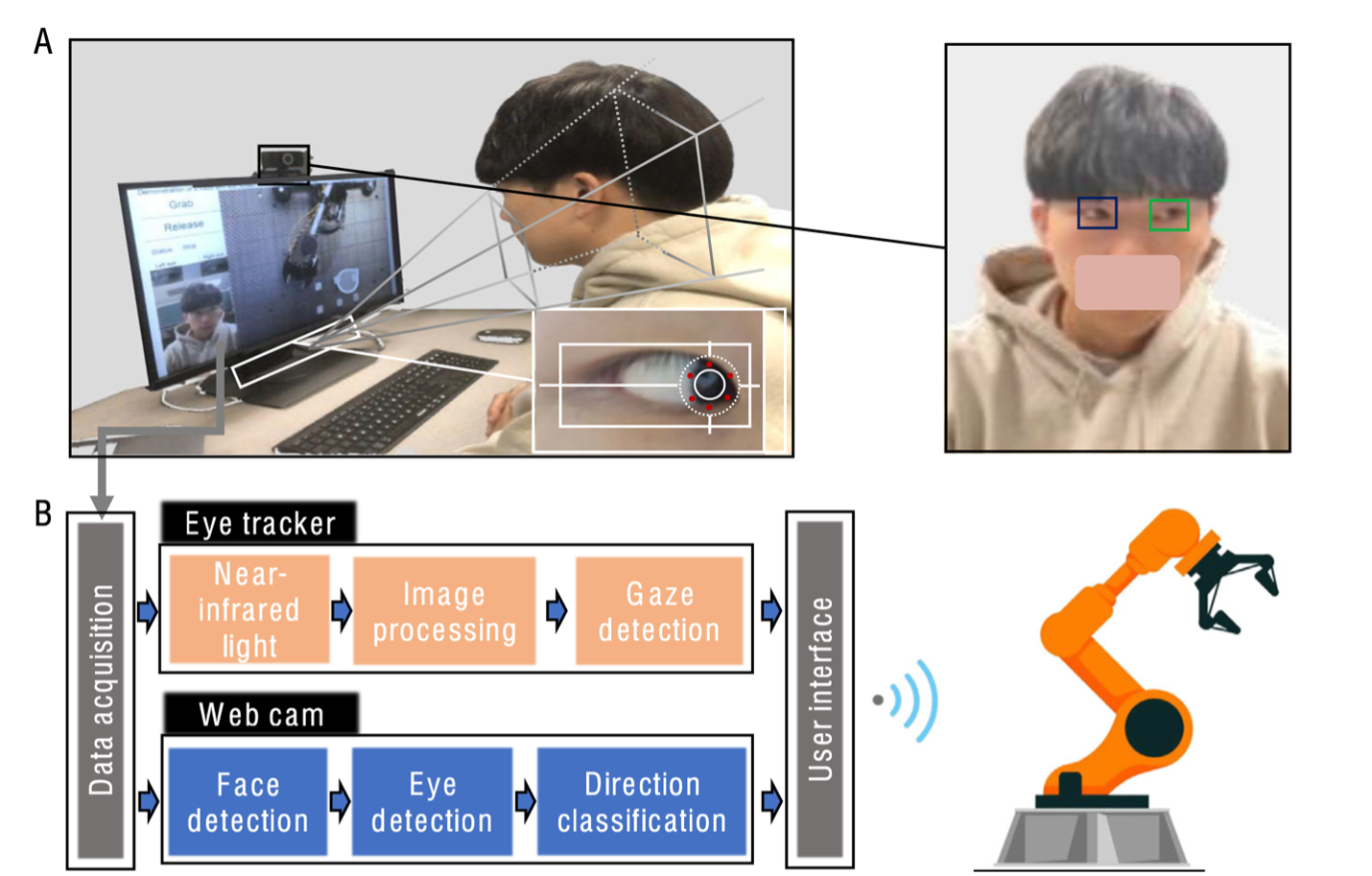

System overview of the HMI system using a screen-based, hands-free eye tracker. A) A subject uses the eye-tracking interface to control a robotic arm: (left) the screen-based hands-free system and (right) a frontal photo from the webcam. B) Flowchart showing the sequence from recording eye movements with two devices (webcam and eye tracker) to robotic arm control.

Deep-learning CNN algorithm for image processing, with performance results: the CNN model with its hidden layers and classification outcomes.

Facial landmarks identified with numbering (left) and an example of face detection (right) of a subject while classifying each eye’s movement.