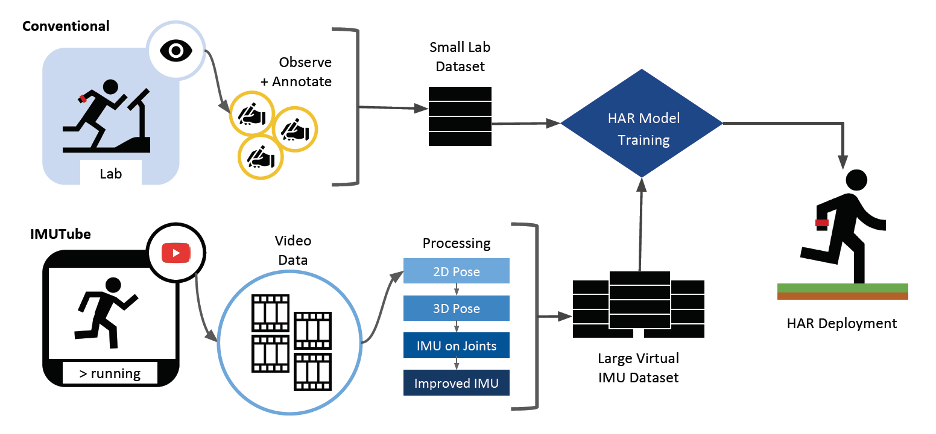

- It applies standard pose tracking and 3D scene understanding techniques to estimate full 3D human motion from a video segment that captures a target activity.

- It translates the visual tracking information into virtual motion sensors that are “placed” on dedicated body positions.

- It adapts the virtual sensors’ inertial measurement unit (IMU) data towards the target domain, i.e., real sensor data, through distribution matching.

- It derives activity recognizers from the generated virtual sensor data, which can be augmented with small amounts of real sensor data in some cases.
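The second and third steps above can be illustrated with a minimal sketch. It is not the IMUTube implementation: the function names (`virtual_accelerometer`, `quantile_map`), the world-frame gravity convention, and the use of rank-based quantile mapping as the distribution-matching method are all assumptions made for illustration. The sketch also ignores sensor orientation, which a fuller treatment would need to model.

```python
from bisect import bisect_right

GRAVITY = 9.81  # m/s^2; assumed to act along the -z axis of the world frame


def virtual_accelerometer(positions, fps):
    """Approximate accelerometer readings (m/s^2) for one tracked joint.

    positions: list of (x, y, z) world coordinates in metres, one per frame.
    A central second difference is used, so the first and last frames
    produce no output. Sensor orientation is ignored (assumption).
    """
    dt = 1.0 / fps
    readings = []
    for prev, cur, nxt in zip(positions, positions[1:], positions[2:]):
        a = [(p - 2.0 * c + n) / (dt * dt) for p, c, n in zip(prev, cur, nxt)]
        a[2] += GRAVITY  # a resting accelerometer reads +g on the up axis
        readings.append(tuple(a))
    return readings


def quantile_map(virtual, real):
    """Map virtual samples onto the empirical distribution of real samples.

    Each virtual value is replaced by the real value found at the same
    rank fraction, so the ordering of the virtual signal is preserved.
    """
    sorted_virtual = sorted(virtual)
    sorted_real = sorted(real)
    n_v, n_r = len(sorted_virtual), len(sorted_real)
    mapped = []
    for v in virtual:
        rank = bisect_right(sorted_virtual, v) - 1  # 0-based rank of v
        frac = rank / (n_v - 1) if n_v > 1 else 0.0
        mapped.append(sorted_real[round(frac * (n_r - 1))])
    return mapped
```

In this sketch, `virtual_accelerometer` would be called once per body position of interest (for example, a wrist joint from the 3D pose estimate), and `quantile_map` would be applied per axis using a small sample of real sensor data as the reference distribution.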

- Automated: Automates the collection of wearable sensor data for training human activity recognition (HAR) models; the system only needs access to large-scale video repositories

- Accurate: In benchmark studies, demonstrated recognition accuracy that was in some cases comparable to that of models trained only on non-virtual (“real”) sensor data

- Superior: In benchmark studies, outperformed models trained on real sensor data alone when only small amounts of real sensor data were added to the virtual sensor dataset

- Economical: Has the potential to replace costly, time-consuming, error-prone efforts involved in collecting non-virtual sensor data from people in real life

- Revolutionary: Offers the possibility of substantially increasing the volume of available movement data, helping the field catch up with advances made in complementary fields such as speech recognition and language processing

- User authentication

- Health care

- Fitness and wellness

- Security

- Other fields in which tracking of everyday activities may be beneficial

On-body sensor-based human activity recognition (HAR) systems have lagged behind other fields in achieving large breakthroughs in recognition accuracy. In fields such as speech recognition, natural language processing, and computer vision, it is possible to collect huge amounts of labeled data, which is the key to deriving robust recognition models that generalize strongly across application boundaries. By contrast, the collection of large-scale, labeled datasets in sensor-based HAR has been limited. Labeled data in HAR is scarce and hard to obtain: sensor data collection is expensive, and annotation is time-consuming and sometimes impossible for privacy or other practical reasons. As a result, typical datasets remain small and cover only limited sets of activities. With further research and collaboration among the HAR, signal processing, and computer vision communities, IMUTube may directly address these shortcomings of the field, leading to exponential increases in the amount of movement data available.

IMUTube has the potential to replace the conventional data recording and annotation protocol (upper left) for developing sensor-based human activity recognition (HAR) systems. Georgia Tech’s system (bottom) uses existing, large-scale video repositories from which it generates virtual IMU data that are then used for training the HAR system.