Existing eye-tracking systems lack real-time application capability

Eye tracking measures eye movements, eye positions, and points of gaze to identify and monitor an individual’s visual attention in terms of location, objects, and duration. It is critical for enabling human-machine interaction in applications such as virtual and augmented reality (VR/AR). However, existing eye-tracking systems struggle to deliver the real-time performance needed to support frequent, substantial human-machine interaction on mobile AR/VR devices with limited computing resources.

There are currently three main limitations. First, VR/AR devices use bulky, lens-based cameras that create a wide gap between the camera and the back-end processor. This hinders efforts to reduce overall device size and significantly increases communication time. Second, the captured data contain a large amount of unnecessary and redundant information; for example, only a small portion of what is recorded actually contains the eye. Finally, state-of-the-art eye tracking relies on deep neural network (DNN)-based eye segmentation and gaze estimation, which demand substantial computing power and storage capacity.

A more efficient, accelerated eye-tracking system that addresses these issues would broaden the range of applications for eye tracking.

Using a lens-less camera increases processing speed, privacy, and comfort

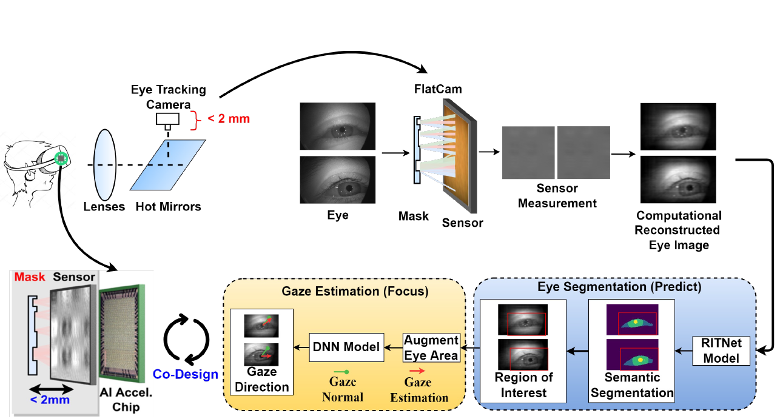

EyeCoD is a lens-free, FlatCam-based eye-tracking system whose algorithm and accelerator are developed together in a co-design (CoD) framework. It aims to deliver real-time processing speed (>240 frames per second [FPS]), a smaller device, and visual privacy within a stringent, milliwatt-level (mW) power budget.
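To put these targets in perspective, the frame rate fixes a per-frame time budget, and the power budget then fixes the energy available per frame. The power value P = 10 mW below is a hypothetical figure chosen only to represent a milliwatt-level budget; it is not a reported EyeCoD measurement.

$$
T_{\text{frame}} = \frac{1}{240\ \text{s}^{-1}} \approx 4.17\ \text{ms}, \qquad
E_{\text{frame}} = P \, T_{\text{frame}} \approx 10\ \text{mW} \times 4.17\ \text{ms} \approx 41.7\ \mu\text{J}
$$

At frame rates above 240 FPS, both the time and the energy available for each frame shrink further, which is why the pipeline has to avoid unnecessary computation.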

The hardware developed for this technology is an ultra-compact, intelligent camera system, dubbed i-FlatCam. It incorporates a lens-free FlatCam instead of a lens-based camera.

Removing the lens yields a thinner, lighter device that is more comfortable to wear. It also allows the back-end eye-tracking processor to be placed closer to the front-end camera, which, together with a dedicated accelerator, increases system speed by reducing communication time. Finally, the system improves privacy by narrowing the eye-tracking field and because its phase mask captures images that are not directly recognizable.
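The privacy point rests on how a mask-based lens-less camera forms its measurements. In the original FlatCam design, a separable mask placed close to the sensor produces a measurement Y that is a coded mixture of the scene X, described by two mask-dependent matrices Φ_L and Φ_R; a viewable image is recovered only computationally, for example by Tikhonov-regularized least squares. Assuming i-FlatCam follows this same separable model (an assumption; the description above does not state it), the relationship is:

$$
Y = \Phi_L X \Phi_R^{\top} + E, \qquad
\hat{X} = \arg\min_{X} \left\| \Phi_L X \Phi_R^{\top} - Y \right\|_F^2 + \tau \left\| X \right\|_F^2
$$

Here E denotes sensor noise and τ > 0 is a regularization weight. Because each sensor pixel mixes light from many scene points, the raw measurement Y is not a recognizable picture of the eye.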

The algorithm developed for this system uses a “predict-then-focus” approach. First, it uses eye semantic segmentation to identify the important regions of an image, such as where the eyes are. Then, instead of processing the whole image, it estimates where the person is looking from those regions only. This avoids unnecessary computation and data handling, saving considerable time and computing resources. Combined with a dedicated artificial intelligence (AI) accelerator chip attached to the FlatCam, the algorithm achieves 4X higher throughput, reaching more than 300 FPS for fast, accurate eye tracking.
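The two-stage idea can be sketched in a few lines of plain Python. This is a toy illustration under stated assumptions, not EyeCoD's implementation: the segmentation mask is taken as given (label 1 = eye), the region of interest (ROI) is simply a padded bounding box, and the hypothetical `focus_gaze` function stands in for the gaze-estimation network by returning the centroid of dark "pupil" pixels. The point it shows is that the focus step only touches the cropped pixels.

```python
from typing import List, Optional, Tuple

Grid = List[List[float]]         # grayscale frame, values in [0, 1]
Mask = List[List[int]]           # segmentation output: 1 = eye, 0 = background
Box = Tuple[int, int, int, int]  # (top, left, bottom, right); bottom/right exclusive


def predict_roi(eye_mask: Mask, margin: int = 1) -> Optional[Box]:
    """'Predict' step: bounding box around every pixel labelled 1 (eye) in the
    segmentation mask, padded by `margin` pixels and clipped to the frame."""
    rows = [r for r, row in enumerate(eye_mask) if any(row)]
    cols = [c for c in range(len(eye_mask[0])) if any(row[c] for row in eye_mask)]
    if not rows:
        return None  # no eye detected in this frame
    h, w = len(eye_mask), len(eye_mask[0])
    return (max(rows[0] - margin, 0), max(cols[0] - margin, 0),
            min(rows[-1] + 1 + margin, h), min(cols[-1] + 1 + margin, w))


def crop(frame: Grid, box: Box) -> Grid:
    """Keep only the pixels inside the region of interest."""
    top, left, bottom, right = box
    return [row[left:right] for row in frame[top:bottom]]


def focus_gaze(roi: Grid, dark_threshold: float = 0.2) -> Tuple[float, float]:
    """'Focus' step stand-in (hypothetical): treat pixels darker than
    `dark_threshold` as the pupil and return the pupil centroid's offset from
    the ROI centre, scaled to [-1, 1] as (x, y). A real system would run a
    gaze-estimation network on the ROI instead."""
    pts = [(r, c) for r, row in enumerate(roi)
           for c, v in enumerate(row) if v < dark_threshold]
    if not pts:
        return (0.0, 0.0)
    h, w = len(roi), len(roi[0])
    cy = sum(r for r, _ in pts) / len(pts)
    cx = sum(c for _, c in pts) / len(pts)
    half_w = max((w - 1) / 2, 1e-9)
    half_h = max((h - 1) / 2, 1e-9)
    return ((cx - (w - 1) / 2) / half_w, (cy - (h - 1) / 2) / half_h)


def predict_then_focus(frame: Grid, eye_mask: Mask):
    """Predict the ROI, then estimate gaze from the ROI only. Returns the gaze
    estimate and the fraction of frame pixels the focus step processed."""
    box = predict_roi(eye_mask)
    if box is None:
        return None, 0.0
    roi = crop(frame, box)
    fraction = (len(roi) * len(roi[0])) / (len(frame) * len(frame[0]))
    return focus_gaze(roi), fraction


if __name__ == "__main__":
    # Toy 8x8 frame: bright background, a grey eye region, one dark pupil pixel.
    frame = [[1.0] * 8 for _ in range(8)]
    mask = [[0] * 8 for _ in range(8)]
    for r in range(2, 5):
        for c in range(2, 6):
            frame[r][c] = 0.6
            mask[r][c] = 1
    frame[3][5] = 0.0  # pupil toward the right edge of the eye region
    gaze, fraction = predict_then_focus(frame, mask)
    print(gaze, fraction)  # (0.6, 0.0) 0.46875
```

In this toy frame the focus step processes 30 of 64 pixels; in a real eye image, where the eye occupies a smaller share of the frame, the savings from skipping the background would be correspondingly larger.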

Hardware and algorithm innovations are integrated at every level of the system and work together to enable real-time eye tracking. Key benefits include:

- Faster processing: EyeCoD’s dedicated AI accelerator chip delivers 4X higher throughput, achieving over 300 FPS compared with 30–80 FPS for lens-based eye-tracking systems.

- Faster communication time: Using a FlatCam-based system allows the back-end processor to be placed closer to the camera, reducing communication time.

- Highly accurate: The “predict-then-focus” model concentrates computation on the most relevant image regions while maintaining accurate gaze estimation.

- More comfortable: This lens-less system allows 5–10X smaller, lighter sensors in the wearable device.

- Greater privacy: Placing the processor close to the camera and using the phase mask of the lens-less FlatCam improve the system’s visual privacy.
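The throughput figures quoted in the list above can be converted into per-frame time budgets with simple arithmetic. This sketch uses only the numbers stated there (over 300 FPS versus 30–80 FPS); the function names are illustrative, and the result says nothing about end-to-end latency, which is not reported here.

```python
def frame_time_ms(fps: float) -> float:
    """Per-frame time budget in milliseconds at a given frame rate."""
    return 1000.0 / fps


def throughput_ratio(fast_fps: float, slow_fps: float) -> float:
    """How many times more frames per second the faster system processes."""
    return fast_fps / slow_fps


if __name__ == "__main__":
    eyecod_fps = 300           # "over 300 FPS", so a lower bound
    lens_based_fps = (30, 80)  # quoted range for lens-based systems
    print(f"EyeCoD: {frame_time_ms(eyecod_fps):.2f} ms per frame")
    for fps in lens_based_fps:
        print(f"Lens-based at {fps} FPS: {frame_time_ms(fps):.2f} ms per frame, "
              f"EyeCoD ratio {throughput_ratio(eyecod_fps, fps):.2f}x")
```

Because 300 FPS is a lower bound, the resulting ratios are lower bounds too: at least 3.75x against the fastest quoted lens-based systems and at least 10x against the slowest.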

Potential applications include:

- Next-generation mobile computing platforms (e.g., smart glasses)

- Gaming systems

Block diagram depicting the workflow of EyeCoD’s systems and methods